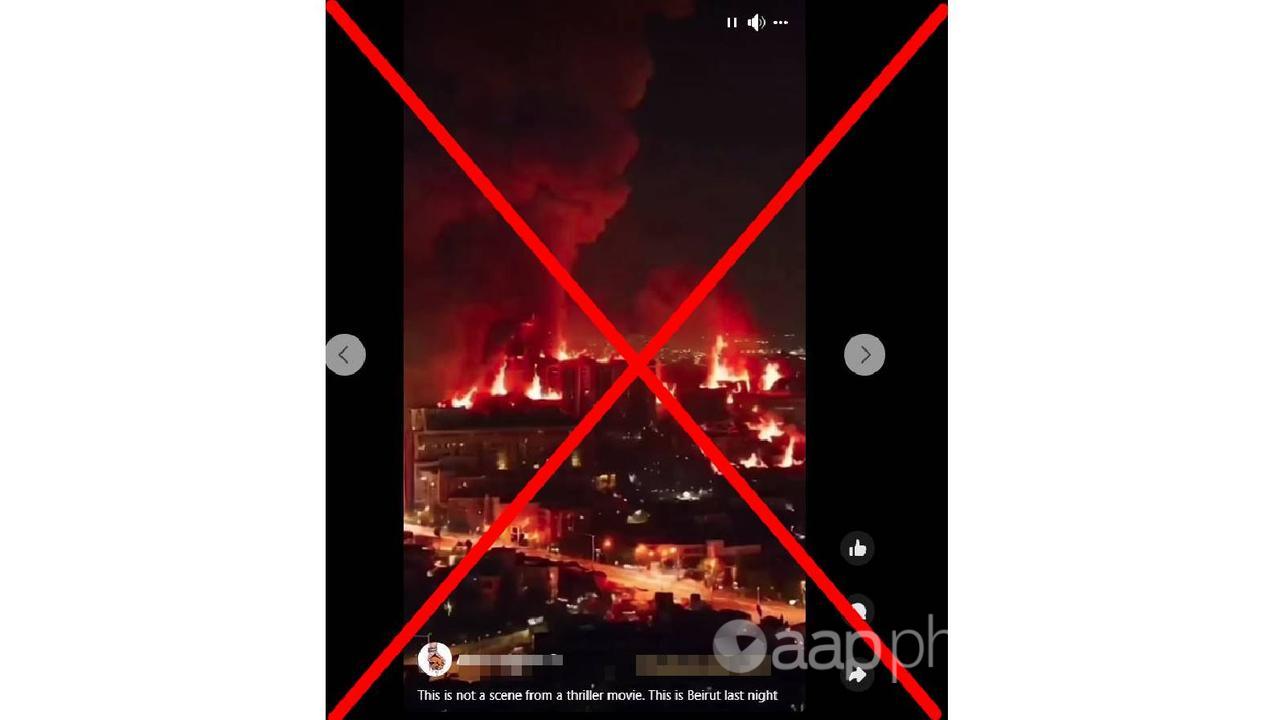

AAP FACTCHECK – A video shows Beirut ablaze following Israel’s bombing of the Lebanese capital, social media users claim.

The claim is false. While Beirut has been the target of air strikes, the video in question has been created with artificial intelligence (AI) technology by a self-described “AI artist”.

The video has been shared widely on social media, including on Facebook accounts that claim it’s a scene from “Beirut last night”.

The short clip at the start of the video shows burning high-rise buildings and streets ablaze.

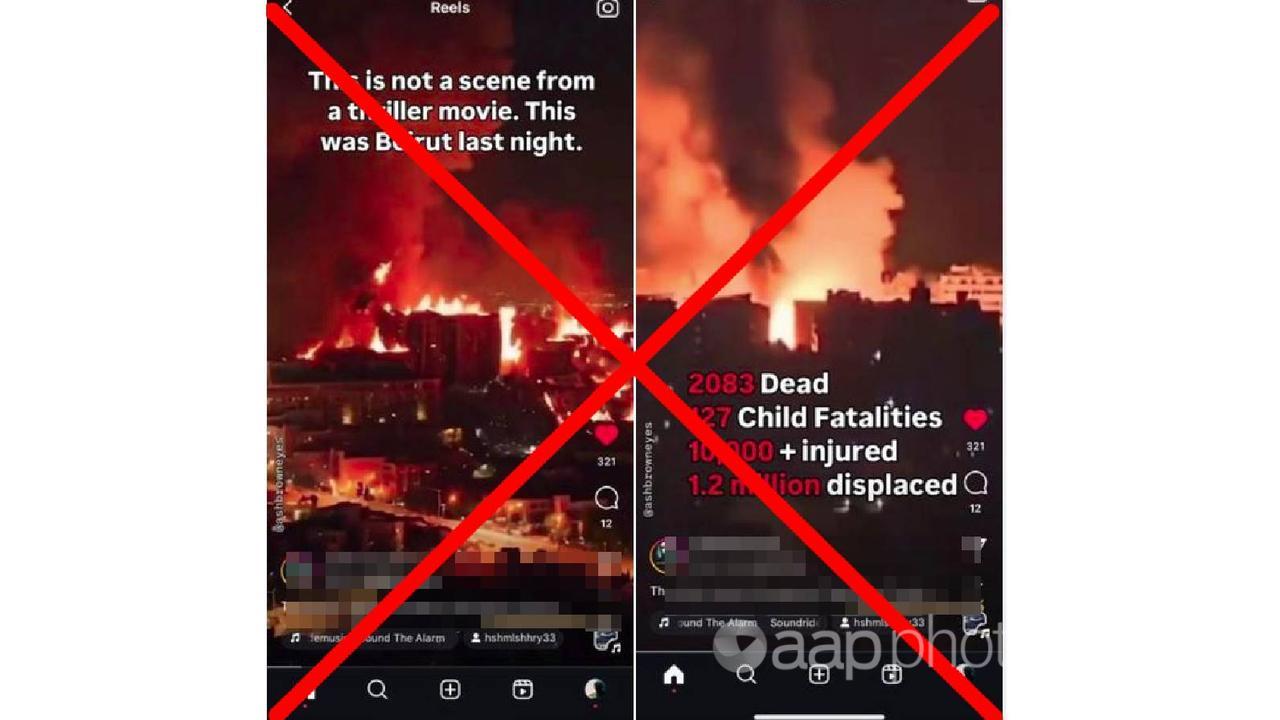

The text accompanying the video reads: “This is not a scene from a thriller movie. This is Beirut last night. Western media coverage is entirely focused on the war with Iran, diverting attention from the brutal slaughter of Lebanese civilians. Death toll has reached 1974. 127 children killed. 9384 injured. 1.2 million displaced.”

However, the source of the clip is a five-second video on the TikTok account of an “AI artist” called digital.n0mad.

The original video is tagged “Creator labeled as AI-generated”.

The digital.n0mad account includes a video playlist titled “Your world is on fire” containing 11 posts. They are AI-generated clips of well-known landmarks on fire, including in New York and Moscow. The clip purporting to be Beirut has generated the most interest, clocking 10.5 million views at the time of writing.

AAP FactCheck referred the post to Scientia Professor Toby Walsh of UNSW Sydney, formerly editor-in-chief of both the Journal of Artificial Intelligence Research and AI Communications. He is also the author of Faking It: Artificial Intelligence in a Human World.

Prof Walsh said it used to be easy to identify deepfake images and video, but generative AI is becoming more sophisticated.

“There would be hands with too many or too few fingers,” Prof Walsh said. “There would be continuity problems where objects changed between frames. There would be synchronisation issues between audio and video.

“But generative AI has advanced so rapidly that most of these have disappeared, and the only real safeguard are watermarks and metadata that tell you about the data’s provenance.

“With these videos, there’s very little to tell you that they’re fake. It requires a user to be sceptical. Do you know (and trust) who provided the video? Is there a watermark? Does the metadata tell you anything?”

Prof Walsh said there were multiple tools that could add fire to a video.

Some telltale signs in the Beirut video include repeating light and shadow patterns in the bottom left corner and streaks of blazing light running down the main street in the foreground.

The second clip in the video appears to be legitimate footage taken in Beirut and dated October 5, as broadcast on Sky News.

The Verdict

False – The claim is inaccurate.

AAP FactCheck is an accredited member of the International Fact-Checking Network. To keep up with our latest fact checks, follow us on Facebook, Twitter and Instagram.
