Images and videos generated by artificial intelligence (AI) will be used to try to influence Australian voters at the ballot box this year, experts are warning.

It will be the first time such technology is widespread during an Australian federal election, with academics predicting certain voting groups will be specifically targeted.

Experts told AAP FactCheck such tactics can be expected from across the political spectrum – although the most egregious examples are unlikely to come directly from the major parties.

Terry Flew, a professor of digital communications at the University of Sydney, said people will be testing the technology to see what they can get away with.

“It’s a bit like cinema in the sense you’re really looking for what’s the most outlandish special effect you can create that will wow people in the cinema … what’s the image you can generate that maximises impact without necessarily being seen to cross a line?”

Professor Flew said the US election gave a good indication of what we can expect, noting a lot of material was specifically targeted, including Trump-related material being pushed towards African-American men.

He said the motivation was not to target those thought to be susceptible, but to target groups where the most could be gained.

In the Australian context, he predicted the Chinese Australian and Indian Australian communities could expect to be targeted.

“They are not considered to be rusted on to any one political party and they can certainly have a significant impact in a number of key electorates,” he said.

J Rosenbaum, an AI artist and researcher at RMIT University, also said the recent US election provided clues as to what we could expect in the federal election campaign.

Dr Rosenbaum pointed to AI images of the aftermath of Hurricane Helene, which smashed the US during the election campaign.

“You had these aftermath images with messages like ‘the Democrats aren’t doing anything to help this poor little girl and her puppy’.”

Elsewhere, there were AI images of a triumphant Donald Trump, or of Kamala Harris depicted as a communist.

Some of the images are not intended to directly deceive an audience but are about fuelling a groundswell of support, feeling or momentum, Dr Rosenbaum said.

TJ Thomson, also from RMIT, said we have already seen some of this content in Australia, specifically in Queensland.

In 2020, the Advance Australia group published a video of a press conference, clearly described as “fake”, featuring then-premier Annastacia Palaszczuk declaring the state was “cooked”.

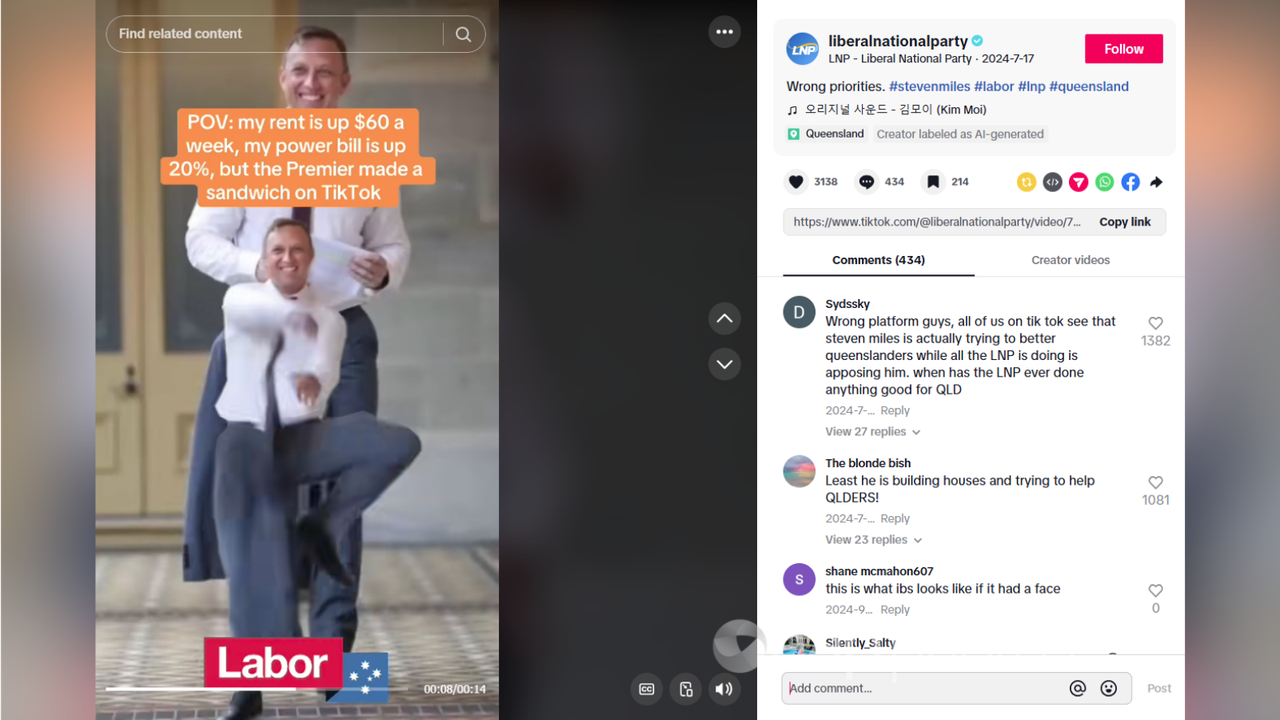

In 2024, Queensland’s LNP published a clearly fake video of then-Queensland Premier Steven Miles performing a comedy dance.

The Australian Labor Party (ALP) published a similar fake video of Peter Dutton dancing, using it to criticise his party’s support for nuclear energy.

Dr Thomson said these “cheapfakes” were intended to put doubt in people’s minds, to “sow seeds of distrust” and create polarisation.

While the Miles and Dutton examples came from the LNP and ALP respectively, Prof Flew said the more egregious deepfake content is more likely to come from the fringes.

“You won’t have the major political parties putting it out,” he said. “It may be lobby groups, advocacy and activist groups, perhaps with a loose affiliation to some of the major parties.”

Western Sydney University media literacy expert Tanya Notley said we should also be prepared for content from state-backed actors, noting a lot of the content tracked during the US election came from Russian state operatives.

Associate Professor Notley also said that while the content may not directly come from the major parties, that doesn’t mean they can’t take advantage of it.

She raised the example of the AI-generated fake images of fans of the singer Taylor Swift (Swifties) backing Donald Trump, which the then-presidential candidate shared with his followers.

“They [the images] often get amplified by news organisations,” Assoc Prof Notley said.

“People are then thinking and talking about those politicians. So sometimes that’s really the main aim, to get people talking about Swifties, this content and talking about those politicians.”

Recent research conducted by Assoc Prof Notley suggests Australian adults are not well placed to tackle generative AI.

Her team questioned and then tested a cohort who said they didn’t understand and were worried about generative AI.

The sample was also given a basic information verification test, with just three per cent scoring more than 50 per cent.